How Does ChatGPT Actually Work: The Technical Side of AI Writing

In the realm of technology, few innovations have captured people's attention like ChatGPT. Many have said that the advent of generative AI will cause a paradigm shift in the way we work, live and interact with one another.

With a staggering one million users in its first five days, endless discussions in tech circles and so many exciting AI writing tools being launched, this natural language processing (NLP) model has become the talk of the town.

But how does it work?

In this article, we will take a deep dive into how ChatGPT works to understand its mechanics and potential benefits. Additionally, we'll explore common machine learning techniques such as supervised learning and reinforcement learning that contributed to making ChatGPT so remarkable.

Understanding Natural Language Processing (NLP)

Before delving into intricacies, let's begin with the foundation: NLP. NLP is a subfield of artificial intelligence that focuses on enabling computers to comprehend, interpret, and generate human language effectively.

As you might already know, everyday applications like autocorrect or grammar suggestions make use of NLP algorithms behind the scenes. However, ChatGPT takes NLP to new heights by pushing the boundaries of how computers handle language.

Some other examples of NLP applications include voice assistants, language translation, sentiment analysis, and text summarization. These applications rely on complex algorithms that analyze and understand human language in order to perform tasks or provide accurate responses.

Supervised Learning and Reinforcement Learning

One of the key techniques used in training ChatGPT is supervised learning.

In this approach, human experts provide examples of input-output pairs to teach the model how to respond appropriately. These pairs consist of a prompt (input) and the desired response (output).

However, since generating responses is an open-ended task with countless possible answers, ChatGPT also utilizes reinforcement learning.

This technique allows the model to further improve its responses through trial and error. The model receives feedback based on how well it generates responses and uses this feedback to adjust its parameters and make more informed decisions in the future. This iterative process helps the model continuously refine its understanding and output.

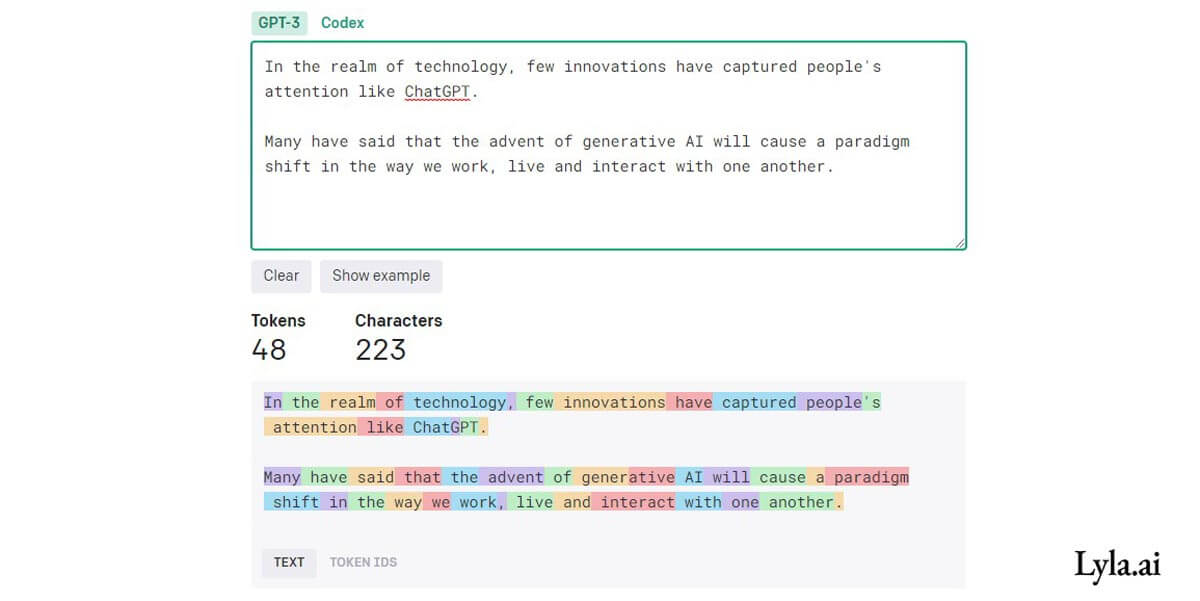

Segmentation and Tokenization

Computers don't naturally understand languages like English or Spanish—they operate through numbers and mathematical operations. So how can they grasp what humans are saying or writing? This is where segmentation and tokenization come into play.

Segmentation involves breaking down sentences into smaller units or segments that can be processed by an NLP model.

These segments can be individual words or tokens—a term frequently mentioned in connection with ChatGPT pricing models (charges per 1000 tokens). Once segmented, tokenization comes next: converting words into a standardized format such as lowercase for uniformity.

Removing Common Words

Not all words contribute equally to sentence meaning—some are just noise cluttering up space for analysis without adding value.

By removing common words that offer little semantic significance from sentences (e.g., prepositions or articles), NLP models can minimize unnecessary data to improve performance and efficiency.

Following this step, our example sentence "I am learning about ChatGPT right now" becomes "I am learning ChatGPT."

Stemming and Lemmatization

To further enhance the model's ability to generalize and recognize patterns, stemming or lemmatization (dictionary-based word form reduction) techniques can be employed.

Stemming involves reducing words to their core meaning by removing suffixes. For our sentence, "learning" might become "learn."

Similarly, lemmatization carries out similar processes but goes a step further by reducing words to their dictionary forms—converting "am" to "be," for instance.

Speech Tagging and Named Entity Recognition

To establish a deeper understanding of the sentence structure and content, NLP models implement techniques known as speech tagging and named entity recognition.

Speech tagging assigns specific tags (e.g., noun, verb, adjective) to each token in a sentence. These tags provide insights into the syntactic structure of the sentence.

On the other hand, named entity recognition identifies and classifies named entities like people's names, organizations, or locations within a given sentence.

Generative Pre-trained Transformers (GPT)

Now that we've unraveled some of the underlying concepts of NLP let's focus on what makes ChatGPT truly unique: Generative Pre-trained Transformers (GPT).

The term 'generative' signifies its ability to generate human-like language or text responses. 'Pre-trained' refers to GPT being initially trained on an extensive dataset combining various sources—this training sets the stage for its subsequent operations.

Transformers: The Heart of GPT

Transformers serve as the key breakthrough behind technologies like ChatGPT. Introduced by OpenAI in 2017 through their paper titled "Attention is All You Need," transformers adopt self-attention mechanisms that allow models to prioritize relevant information during both input processing and output generation.

This attention to context enables transformers to process input sequences of any length efficiently, outperforming older techniques like recurrent neural networks and convolutional neural networks.

The Encoder-Decoder Duo

A transformer consists of two main components: an encoder and a decoder. The encoder goes through the segmentation, tokenization, removal of common words, stemming/lemmatization, speech tagging, and named entity recognition steps described earlier.

It converts the input sentence into a numerical or vector-based representation that captures its meaning and structure in a compact form.

The decoder takes the encoded representation from the encoder as input and generates an output sequence based on this information.

In ChatGPT's case, it focuses on sequence-to-sequence transformations—feeding in one sentence or question and producing another sentence or an answer.

Training ChatGPT

Training ChatGPT was no easy feat for OpenAI. They employed a combination of machine learning techniques to fine-tune the model's performance: supervised learning, reinforcement learning, and optimizing against reward models.

In supervised fine-tuning, prompt-style inputs were created where humans described the desired output for each input to train the model effectively. The model studied these prompts' linguistic patterns to better understand what was expected.

Additionally, OpenAI used reinforcement learning—a technique inspired by how animals learn through trial-and-error interactions with their environment—to optimize ChatGPT further.

A reward model was established in which labels ranked outputs from best to worst based on their quality compared to gold-standard responses.

Through repeated iterations of adjusting policies based on rewards and penalties received from various prompt-outputs combinations fed into ChatGPT—the model gradually improved its ability to generate.

The amount of data used was massive, consisting of 340GB of text from diverse sources on the internet. OpenAI took measures to ensure the quality and safety of the dataset by carefully filtering and preprocessing it.

The Challenges

Training ChatGPT had its challenges, such as addressing biases in the responses generated by the model. OpenAI implemented a two-step process to mitigate this issue.

First, they fine-tuned the model using data from human AI trainers who followed guidelines specifically designed to reduce biased behavior. Then, they made use of reinforcement learning from human feedback (RLHF) to further improve system performance and address potential biases.

This comprehensive training approach allowed OpenAI to create a more reliable and safe version of ChatGPT that can generate helpful and coherent responses across a wide range of topics.

Another challenge they faced was improving the model's ability to provide accurate and trustworthy information. OpenAI tackled this issue by incorporating a feature called "Model Cards" that provides details about ChatGPT's capabilities and limitations. This transparency allows users to better understand and interpret the model's responses.

Additionally, OpenAI actively encourages user feedback to identify any remaining issues or biases in the system. They are continuously working on refining ChatGPT and making regular updates to address these concerns.

Final Thoughts: Welcome to a New Paradigm

ChatGPT represents more than just an impressive AI-powered tool—it marks a significant milestone in technological advancements across various domains like art, business, and technology itself.

As we continue progressing with NLP models empowered by generative pre-trained transformers, abundance of possibilities emerge, paving the way for a second Renaissance.

By understanding the foundational aspects of NLP, the workings of transformers, and the training methods employed by OpenAI to create ChatGPT, you gained valuable insights into how this groundbreaking technology takes shape.

Armed with this knowledge, you can navigate and utilize ChatGPT more effectively while staying informed about future developments in the field—a key to seizing new opportunities in an increasingly AI-driven world.